In this entry I am going to cover a handful of common myths and misconceptions about how SQL Server handles memory. Each of these comes from repeated instances of confusion in my dealings with other SQL Server users. Many of these have come up from even very seasoned DBAs so don’t feel bad if you’ve been laboring under a misconception yourself.

The Myth:

I logged in and found my SQL Server is running at 95% memory utilization. I restarted the box which fixed it temporarily, but it grew and grew right back to 95% within a few hours!! SQL Server must have a memory leak! This needs to be fixed!

It’s not a memory leak. It’s also probably not a problem. SQL Server will allocate memory from the OS as needed and hold that memory essentially forever. SQL Server rarely gives memory back to the OS once it has been allocated. If let to run free SQL Server will eventually strangle the OS by taking all of the available memory leaving nothing for the OS to use for normal operation.

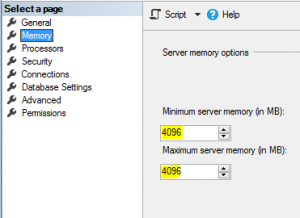

There is a reprieve from this risk, however. The max server memory setting (see screenshot below) allows a DBA to put a cap on the amount of memory that SQL Server will allocate to itself. Most SQL Servers will spend most of their time sitting right at this maximum value so I recommend setting this number below the total system memory amount to allow the operating system some room to operate. At least 4GB difference is recommended, but often more can be required depending on overall server load. If the OS is running at or over 99% memory utilization or the page file is being used heavily, consider reducing the max server memory so that the OS has the ability to properly operate.

UPDATE: The 4GB difference is good for servers up to about 32GB of total RAM, but additional RAM should be left for other processes at a rate of about 1GB per 8GB of system memory above 32GB. Even more should be left if there are other processes like SSRS or SSMS that will need memory.

The Myth:

If I set the min server memory to the same value as the max server memory then SQL Server won’t need to allocate or deallocate memory. It can be fixed at the proper amount at startup and never move.

This is one of the most common misconceptions I hear. SQL Server cannot be fixed to a memory amount in this way. In the following screenshots I have set the min server memory setting to the same as the max server memory setting, 4GB. My laptop has 8GB.

|

Find this screen by right clicking on a server name in SSMS Object Explorer and choosing Properties. |

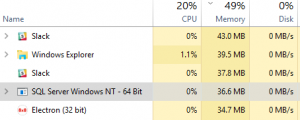

I restarted the service and waited several hours. The memory utilization by the SQL Server service never approaches the min server memory setting.

Immediately after service restart. |

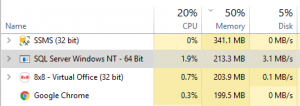

1 hour later |

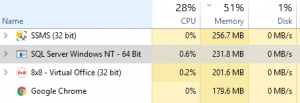

Several hours later. Nowhere near 4GB yet.

|

This is because memory is generally only allocated to SQL Server by requests. If there are no requests, there is no memory allocation. In the words of Microsoft…

The amount of memory acquired by the Database Engine is entirely dependent on the workload placed on the instance. A SQL Server instance that is not processing many requests may never reach min server memory. –https://technet.microsoft.com/en-us/library/ms180797(v=sql.105).aspx

So what does the min server memory setting mean then? The min server memory setting means tell the Database Engine how much memory to keep in a scenario where it would otherwise return memory to the OS. If SQL Server had allocated more memory than the minimum server memory amount and needed to return some to the OS for some reason, it would NOT release RAM below the minimum amount. If a request comes in to SQL Server to release RAM and it hasn’t yet reached the minimum, it will not comply with the request.

In the modern VM world it is used by the balloon memory driver. If the balloon memory driver decides that due to an over-allocation on the host and a sudden need for more RAM that some VMs are going to have to lose some of their currently allocated memory, the balloon memory driver will respect the min server memory setting and make sure to leave the VM with at least that much memory. As such, I recommend that this setting be set slightly below the max server memory to allow the balloon memory driver some memory in this hopefully-rare scenario.

The Myth:

My VM administrator says that I’m not using all the memory I asked for. In fact, 70% of it is idle at any given time. We’re going to return that memory to the resource pool to better utilize it on other VMs.

The VM administrators are not lying or misinterpreting what is on their screen. The metrics displayed on their management tool (Microsoft Hyper V Manager or VMWare vSphere Client) are lying to them. When the VM management tool is checking on memory activity it asks the OS. The OS only knows that this memory was allocated to SQL Server a long time ago and that it hasn’t moved since. It appears to the OS to be stagnant and unused and this is what it reports to the VM management tool. However, this memory, once allocated to SQL Server, is in the domain of SQLOS which is likely very actively using this memory in a way that is largely invisible to the OS, and by extension, the VM management tool and eventually the VM administrators themselves. The VM tools are not yet smart enough to ask SQLOS what it is going on with the memory and report falsely that the memory is not being effectively utilized.

What should I check instead? There are many memory metrics available that, when combined, tell the whole memory story, but I am partial to Page Life Expectancy(PLE), especially in OLTP environments. This one statistics give a very macro look at the memory health of a SQL Server. Read more about PLE and what constitutes a good vs bad value here. This query will show PLE values for an instance of SQL Server.

SELECT [object_name], counter_name, instance_name, cntr_value FROM sys.dm_os_performance_counters WHERE counter_name = ‘Page life expectancy’

The Myth:

SQL Server doesn’t want more memory. My VM is set to auto allocate more memory if the need arises and it hasn’t done that. If I really needed more memory that would have happened by now.

Consider the facts from the first myth on this page. SQL Server’s memory allocation cannot grow beyond the max server memory setting. Since SQL Server knows that it can’t ask the OS for more, it doesn’t. Only a non-SQL Server operation could cause an apparent memory need to make its way to the VM host to be resolved via a larger memory grant and, by rule, this memory could not find its way to SQL Server anyway.

Great post addressing some common myths.

The VM memory usage issue is an argument that every DBA running machines in a virtual environment should be prepared to make and win. The metrics shown in vCenter will always give the picture that SQL Server is wasting memory because it is not using it.

I’ve had several servers that historically were given say 32 GB of memory for a Standard version install, which was fine for what they were doing at the time. When we went P2V it was suggested that we lower the allocated memory because the host could do so much magic. I found the opposite in the PLE was lower, I was seeing lock resource alerts, etc.

I have several reports I use that display how SQL Server is using memory and they detail this use by type such as buffer pool, connection, etc. With these I can demonstrate that not only is SQL Server using all this memory, it is starving for more. That min/max memory setting for SQL Server isn’t the end all setting like too many think.

I argued that this VM should be allocated 96 GB of memory with 84 GB going to the pool and the other 12 going to whatever other needs SQL Server has and the OS. The result was a happy server and the application utilizing it. My report showed that the server was no longer under memory pressure. It can be argued that the 12 GB left for other SQL needs and OS is a bit much, but I’m arguing for the systems I manage and not the vCenter environment even though I have a hand in managing that as well.

Adding memory to the hosts in a vCenter environment may not be cheap, but it is something any admin needs to be realistic about. Sure you have to do it in all servers and if you have a DR twin, then there too. This is likely less expensive than adding a blade, licensing for VMware, Veeam and all the other items.

Nice article! Thanks for shining a little light on these myths.